Great copy isn’t written—it’s tested. The best marketers don’t rely on instinct or wordplay; they rely on data to reveal what actually drives clicks, sign-ups, and sales.

Split-test copywriting is the discipline of creating multiple versions of your message—headlines, buttons, offers, or even full emails—and letting real audience behavior decide the winner.

It’s where creativity meets precision, where bold ideas are proven or disproven by numbers.

When done right, it turns ordinary copy into high-performing assets that consistently convert.

Why Split-Test Copywriting Matters for Growth

In today’s competitive digital landscape, every message you publish competes for attention. That’s why employing split-test copywriting is not optional—it’s essential.

When you systematically create multiple versions of your copy and compare how they perform, you generate clear evidence of what resonates with your audience.

This process of variation and measurement ensures you rely on data rather than on intuition alone.

Think about an email campaign where two subject lines go out to equal, randomly selected segments. If one subject line clearly outperforms the other in open rate and click rate, you'd want to roll that version out broadly.

That’s the essence of split-test copywriting. It turns a guess into a hypothesis, a hypothesis into an experiment, and the experiment's winning variant into a scalable asset.

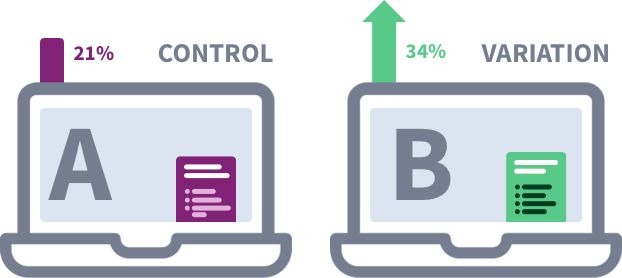

The concept of split-testing is widely used in conversion-rate optimization, where traffic is split between versions of a page or asset, metrics are tracked and compared, and the superior version becomes the champion.

Using split-test copywriting means you craft two (or more) distinct variants of your copy—headline, body, call-to-action, offer structure—and you deliver them to comparable audience segments.

You then measure which version drove better outcomes: clicks, conversions, engagement, or whatever goal you’ve set.

With each iteration, you learn what language, style, structure or offer most effectively drives your results.

The reason this is powerful: you’re controlling variables, you’re gathering real user behavior, and you’re making incremental improvements.

Rather than writing one “perfect” piece of copy and hoping it works, split-test copywriting invites you to optimize—to evolve your communication.

When you embed this discipline into your marketing, the cumulative effect is substantial.

Over time, your winning variants form a library of high-performing messages, offers and formats that reduce risk and increase conversion predictability.

That’s why investing effort into split-test copywriting should be high on your marketing agenda.

How to Design Your Split-Test Copywriting Framework

Before you dive into writing multiple variants of your copy, you need a structured approach.

That approach begins with defining your objective, setting your baseline, creating a hypothesis, designing the variants, and planning your measurement.

Using split-test copywriting without this rigor usually leads to inconclusive or misleading results.

#1. Define the Goal and Baseline

Start by articulating exactly what you want your copy to achieve. Is it more click-throughs on an email? More form-fills on a landing page? More purchases from a particular ad?

For example, you might say: “Increase click-through rate by 15% relative to the current version.”

You then determine your baseline metric: the rate at which your current version converts. With that baseline in hand, you can measure uplift.
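It helps to compute the baseline and the goal explicitly, because “15%” can mean a relative lift or 15 percentage points. The sketch below assumes the goal is a relative lift; the function names and the 4.0% baseline are hypothetical.

```python
def relative_uplift(baseline_rate: float, variant_rate: float) -> float:
    """Relative change of a variant over the baseline (0.15 means +15%)."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (variant_rate - baseline_rate) / baseline_rate


def target_rate(baseline_rate: float, relative_goal: float) -> float:
    """Rate a variant must reach to hit a relative improvement goal."""
    return baseline_rate * (1 + relative_goal)


# Hypothetical numbers: a 4.0% baseline click-through rate and a +15% relative goal.
baseline = 0.040
goal = target_rate(baseline, 0.15)
observed = 0.047

print(f"Target CTR: {goal:.1%}")  # Target CTR: 4.6%
print(f"Observed uplift: {relative_uplift(baseline, observed):+.1%}")  # Observed uplift: +17.5%
```

If the goal were 15 percentage points instead, the target would be 19.0%, which is a very different test.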

#2. Formulate a Hypothesis

A good hypothesis might be: “If we emphasise cost-savings rather than feature details, then the click rate will increase by at least 10%.”

Having this hypothesis ensures you are testing a meaningful variable—not just every change under the sun.

In split-test copywriting, each variant should reflect a meaningful difference based on audience insight or past data.

#3. Identify the Variant Elements

In your split-test copywriting, decide which element to vary.

It could be headline wording, value proposition framing, call-to-action language, length of copy, tone, offer structure, etc.

As one practitioner observed, the headline often yields the biggest single lift. It’s also critical not to change too many things at once unless you’re comfortable attributing results to a bundle of changes.

#4. Plan the Traffic and Sample Size

Split tests require adequate traffic for the results to be statistically meaningful.

If you send too few visitors to each variant, you risk drawing conclusions from noise.

Tools and calculators exist to help estimate needed sample size and significance.
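Such calculators typically implement some version of the standard sample-size formula for comparing two proportions. Here is a minimal sketch using the normal approximation, assuming a two-sided test, equal traffic to each arm, 5% significance and 80% power. The function name is hypothetical, and a dedicated calculator or statistics library is the safer choice for real decisions.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH arm of a two-sided, two-proportion test.

    Uses the normal approximation with equal allocation between arms.
    """
    if p_control == p_variant:
        raise ValueError("the expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_control - p_variant) ** 2)


# Hypothetical: a 4.0% baseline, hoping to detect a lift to 4.6%.
print(sample_size_per_variant(0.040, 0.046))
```

For that hypothetical 4.0% baseline and 4.6% target, the result is roughly 18,000 visitors per variant. Halving the detectable difference roughly quadruples the requirement, because the difference appears squared in the denominator.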

You also need to assign traffic randomly and ensure segments are comparable to avoid bias.
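One common way to randomise assignment while keeping each visitor in the same variant on repeat visits is to hash a stable identifier. The sketch assumes you have such an ID (for example, a cookie or user ID) and uses SHA-256 from Python's standard library; `assign_variant` and the experiment name are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "challenger")) -> str:
    """Deterministically map a user to a variant.

    Including the experiment name in the key reshuffles users between
    experiments, so one test's split doesn't carry over into the next.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# The same visitor always lands in the same bucket...
assert assign_variant("user-42", "headline-test") == assign_variant("user-42", "headline-test")

# ...and across many visitors the split is close to 50/50.
split = Counter(assign_variant(f"user-{i}", "headline-test") for i in range(10_000))
print(split)
```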

#5. Run the Test and Measure Results

Deploy your variants, let them run long enough to reach statistical confidence, then compare performance.

Important metrics may include click-through rate, conversion rate, bounce rate, engagement time, etc.
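For rate metrics such as click-through or conversion rate, a common check is the two-proportion z-test. A minimal sketch, assuming independent visitors, a single pre-planned comparison and samples large enough for the normal approximation; the function name and counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference B - A in conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if there were no difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 400/10,000 vs challenger 470/10,000.
z, p = two_proportion_z_test(400, 10_000, 470, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
if p < 0.05:
    print("Difference is statistically significant at the 5% level.")
```

With these invented counts, z comes out near 2.4 and p near 0.015, so the challenger's lead is unlikely to be noise at the 5% level.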

Once a winner emerges, adopt that version, document the learning, and then iterate for the next test.

This cycle of measure → learn → apply is the hallmark of disciplined split-test copywriting.

By establishing this framework, you ensure your split-test copywriting efforts are systematic, measurable and scalable.

It shifts your process from guesswork to experiment and learning, which means faster growth and more reliable copy performance.

Variant 1: Headline Changes in Split-Test Copywriting

The headline is often your first—and sometimes only—chance to capture attention.

In split-test copywriting, experimenting with headline variants is among the highest-impact actions you can take.

Because the headline is what visitors see first (whether an email subject line, ad headline or landing page title), it determines whether the rest of the copy gets a chance to convert.

When you craft headline variants, think of different angles: emphasising benefit, addressing a pain point, asking a question, or creating contrast.

For example, one variant might lead with “Boost your conversion rate in 7 days”, another with “Why most campaigns fail to convert”.

Even small differences in wording can produce materially different results.

You must also embed your core message clearly—so that the headline aligns with the rest of your copy.

If you vary the headline but the body copy fails to support it, visitors may feel the message is disconnected.

That weakens your overall performance.

In your split-test copywriting plan, create two headline variants: the control (your current headline) and the challenger (new headline).

Send equal traffic to each, monitor the key metric (e.g., click-through rate or conversion rate) and determine whether the challenger outperforms the control with statistical confidence.

As noted in one study, a headline change improved conversions by 90% in a PPC landing page test.

That shows how headline variants can deliver large uplifts when done thoughtfully. After identifying a winning headline, document what language resonated—e.g., more direct benefit, urgency or clarity—and apply that insight to future campaigns.

Always record the headline variants used, their results and any contextual data (traffic source, device type, audience segment).

This documentation supports your future split-test copywriting efforts and builds a reservoir of winning formulas.

Variant 2: Offer Framing and Value Proposition in Split-Test Copywriting

Once the headline has drawn attention, the value proposition and offer framing keep the reader engaged and convince them to act.

In split-test copywriting, you can produce variants that frame the offer differently: focus on savings vs. benefits, guarantee vs. standard purchase, urgency vs. evergreen, etc.

For example, Variant A might emphasise “Get started now and save 20%”. Variant B might say “Unlock steady growth in 90 days—risk-free guarantee”.

Though the core product is the same, how you position the value changes its emotional appeal and the reader's inclination to act.

When planning your split-test copywriting around offer framing, ensure each variant remains credible and clear.

Don’t exaggerate claims. Your hypothesis could be: “Positioning the offer as ‘risk-free guarantee’ will generate at least a 12% higher conversion rate than framing the offer with a time-limited discount.”

Then test accordingly.

You should also keep all other variables constant (aside from the offer framing) so you can attribute results effectively.

For instance, keep the same headline, body length, layout and imagery—only vary the value-proposition language.

That way, your split-test copywriting yields clean insights.

In documenting the results, note not just conversion uplift but also downstream behaviour (e.g., quality of leads, retention, complaint rate).

A variant that increases initial conversions might attract lower-quality leads, so evaluation should look beyond the immediate metric.
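A minimal way to put both views side by side is sketched below. It assumes you can later mark which conversions turned into qualified leads (the definition of "qualified" is yours to set); the class, variant names and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantOutcome:
    name: str
    visitors: int
    conversions: int
    qualified: int  # conversions that later met your (hypothetical) quality bar

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors

    @property
    def qualified_rate(self) -> float:
        # Qualified leads per visitor, not per conversion, so variants stay comparable.
        return self.qualified / self.visitors


outcomes = [
    VariantOutcome("time-limited discount", 10_000, 520, 156),
    VariantOutcome("risk-free guarantee", 10_000, 460, 207),
]

for o in outcomes:
    print(f"{o.name}: {o.conversion_rate:.2%} convert, {o.qualified_rate:.2%} qualified")

best_by_conversion = max(outcomes, key=lambda o: o.conversion_rate)
best_by_quality = max(outcomes, key=lambda o: o.qualified_rate)
print("Winner on conversions:", best_by_conversion.name)
print("Winner on qualified leads:", best_by_quality.name)
```

In these invented numbers the discount wins on raw conversions while the guarantee wins on qualified leads, which is exactly the case where the immediate metric alone would mislead. The quality comparison needs its own adequate sample before you act on it.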

Through many cycles of variant testing in offer framing, you build a deeper understanding of how your audience perceives value—what drives them to act and what language they respond to.

This is one of the powerful long-term benefits of disciplined split-test copywriting.

Variant 3: Call-to-Action Copy and Placement in Split-Test Copywriting

Your call-to-action (CTA) is the final prompt to motivate action.

In split-test copywriting, CTA button variants can focus on wording, size, placement, design, and context.

For instance, you may test “Start your free trial” vs. “Get instant access now” or test placing the CTA near the top of the copy versus after social proof.

When designing CTA variants within your split-test copywriting plan, consider the following factors:

#1. Verb strength and clarity: Use action verbs that clearly state what the visitor will receive.

#2. Urgency or immediacy: Words like “now”, “instantly” and “today” can increase the impetus to act.

#3. Benefit-orientation: Instead of “Submit”, try “Save my spot” or “Secure my discount”.

#4. Location and visual prominence: A CTA above the fold vs. below a testimonial may perform differently.

#5. Supporting copy: If your CTA variant adds urgency, the surrounding copy must support it.

For example, an ad test might compare the generic “Learn more” with a more specific, social-proof CTA such as “Join 1,000+ marketers now”.

There is no public figure to cite for that particular example, but sources indicate that CTA language and placement are among the highest-impact elements to test.

In practice, your split-test copywriting might involve controlling all else (headline, offer, visuals) and only varying the CTA wording and position, tracking which version achieves the target metric (e.g., form submissions).

You then adopt the winner and continue evolving your CTA combinations. Over time this builds a library of high-performing CTAs you can reuse and adapt for new campaigns.

Variant 4: Tone, Length and Structure in Split-Test Copywriting

Beyond the obvious elements like headline and CTA, the tone, length and structural layout of your copy matter a great deal.

Variant testing around these gives deeper insight into how your audience prefers to consume your message.

Tone

Tone refers to the voice and style of your copy: formal vs. informal, authoritative vs. friendly, urgent vs. steady.

For example, one variant might use conversational language (“Ready to boost your results?”), while another uses formal, professional phrasing (“Enhance performance with our proven solution”).

In split-test copywriting, you might hypothesise: “A friendly, conversational tone will yield at least 8% higher engagement than a formal tone in our email campaign.”

Then test accordingly.

Length

Does long-form copy perform better than short? In some cases, detailed copy that addresses objections thoroughly outperforms brief copy.

In other cases, shorter copy loads faster, engages quickly and converts better.

Conversion-rate optimization research suggests that reducing length sometimes increases conversions.

So in your split-test copywriting, create one long-form version and one concise version, while keeping other elements constant, then compare performance.

Structure

This involves paragraphing, sub-headings, bullet lists, social proof placement, etc. One variant might lead with a customer quote, another with a benefit list.

One might embed images early, another later. In split-test copywriting, structure variation can change how the reader flows through the copy and perceives the message.

When you run this kind of variant test, you capture deeper behavioural signals: time on page, scroll depth, engagement metrics, as well as conversion outcome.

These additional signals help you understand not just “which variant won” but “why it won”.

Over multiple rounds of testing tone, length and structure, you build a strong sense of your audience’s reading habits and preferences.

That allows you to craft future copy faster and more confidently—because you’re writing in a style your market already responds to.

Variant 5: Imagery and Media Integration in Split-Test Copywriting

While your primary focus may be text, imagery and media choices impact how the copy is received and engaged with.

In split-test copywriting, you can design variants that differ only in their media integration—not just images vs. no images, but which images, video vs. static, image placement, etc.

For example, Variant A of a landing page might open with a bold hero image of a user succeeding.

Variant B might start with a short video testimonial. Both versions share the same headline, body copy and CTA.

By measuring performance, you can identify which variant of media integration better supports your copy.

Split testing these types of page variants helps uncover not just “does media matter?” but “which media element drives more action in our audience?”

In your split-test copywriting framework, you’ll treat media variation as another element to test systematically.

For instance, you might hypothesise: “Adding a 30-second video above the fold will increase conversion rate by 10% compared with a static hero image.”

Then test, measure, evaluate.

One key point: ensure the copy context supports the media. If the variant opens with a video, the copy must refer to it and build off it.

That way your split-test copywriting remains coherent.

Document which media variant worked and why, since results are sometimes surprising (e.g., a simple image outperforms video because of load time on mobile).

Over time your media choices become aligned with your copy, audience behaviour, and device mix.

Variant 6: Audience Segmentation and Personalised Copy in Split-Test Copywriting

One of the most advanced yet often overlooked dimensions of split-test copywriting is audience segmentation.

Rather than sending one generic message, you design variants of your copy tailored to different segments of your audience—by behaviour, persona, stage in the funnel, channel, device, etc.

Then you compare performance across segments.

For example, you might have two variants: Variant A addresses “first-time website visitors” and emphasises trust building and trial offers.

Variant B addresses “returning visitors” and emphasises upsell or cross-sell benefits. The same core product is offered, but the framing and body copy differ to match audience mindset.

Split-test copywriting for segments requires adequate traffic and careful segmentation to avoid data bias.

But the payoff is high. As one article says: “Testing new marketing strategies by measuring real in-market behaviours at scale often includes the most important behaviour: did they take out their wallet and click ‘buy’.”

In your framework, you might test variants like: “Visiting for the first time: emphasise ‘Just for you’ and ‘No experience needed’.” vs. “Returning visitor: emphasise ‘Back for more? Get an exclusive upgrade’.”

Then measure which story resonates better, and refine your variant library accordingly.
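Reading results segment by segment is mostly a grouping exercise. A minimal sketch, assuming each visit is logged as a (segment, variant, converted) tuple; the function name and log entries are hypothetical. The same function works for channel, device or traffic source if you log those as the key instead. Each slice has fewer visitors than the whole test, and comparing many slices raises the odds that one shows a difference by chance.

```python
from collections import defaultdict
from collections.abc import Iterable


def rates_by_segment(
    events: Iterable[tuple[str, str, bool]],
) -> dict[tuple[str, str], tuple[float, int]]:
    """Map (segment, variant) -> (conversion rate, visitors)."""
    counts = defaultdict(lambda: [0, 0])  # [conversions, visitors] per key
    for segment, variant, converted in events:
        tally = counts[(segment, variant)]
        tally[0] += int(converted)
        tally[1] += 1
    return {key: (conv / n, n) for key, (conv, n) in counts.items()}


# Hypothetical log entries.
log = [
    ("first-time", "trust-and-trial", True),
    ("first-time", "trust-and-trial", False),
    ("returning", "exclusive-upgrade", True),
    ("returning", "exclusive-upgrade", True),
    ("returning", "exclusive-upgrade", False),
]
for (segment, variant), (rate, n) in sorted(rates_by_segment(log).items()):
    print(f"{segment:<11} {variant:<18} {rate:.0%} of {n} visitors")
```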

Document the segment definitions, variant differences, and results carefully.

Over time you’ll build segment-specific winning copy assets—so your split-test copywriting becomes not just about one message but about tailored messages that speak directly to each audience slice.

Variant 7: Channel and Context Adaptation in Split-Test Copywriting

Your audience might consume your message via email, social media, landing page, ad, push notification, or SMS.

Each channel has its own constraints and expectations. Good split-test copywriting recognises that copy optimized for one channel may underperform in another.

Therefore, designing channel-specific variants is essential.

For example: Variant A is copy tailored for a mobile ad with very short attention time (“Tap to get your free guide”).

Variant B is a longer-form email version (“Here’s your guide, download now and start transforming results”).

Both promote the same offer, but copy differs in length, tone and structure to suit context. By tracking performance separately, you learn channel-specific behaviours.

Other context variables include time of day, device type and traffic source.

You may test copy variants for “morning email openers” vs. “evening SMS alerts”, or “desktop landing page” vs. “mobile landing page”.

In your split-test copywriting strategy, you might hypothesise: “A shorter, benefit-first message will outperform a longer narrative message when delivered via mobile ad.”

Then test and measure.

Tracking results by channel helps you refine your variant library not only by message but by deployment context.

Over time, you learn what copy variant works best for what channel.

This gives you the flexibility to deploy high-performing variants quickly and customize for context rather than starting from scratch each time.

How to Iterate and Scale Your Split-Test Copywriting Process

After running multiple rounds of variant testing, the next phase is iteration and scaling.

The goal is to build a repeatable process for split-test copywriting that yields continuous improvement and becomes part of your ongoing workflow.

Document Insights and Create a Variant Library

Every test you run generates insight: what language resonated, what user behaviour changed, which variant won, and how much lift you achieved.

Store these insights in a central location, cataloguing which variant elements performed well, for which audience and which channel.

Over time this forms your library of high-performing copy variants.

When you launch a new campaign, you can quickly adapt a proven variant rather than starting from scratch.
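A spreadsheet can serve as the library; what matters is recording the same fields for every test. Below is a minimal sketch of one possible record format and lookup, assuming each test is logged with the element tested, its context and the measured lift. The field names and entries are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExperimentRecord:
    element: str            # e.g. "headline", "cta", "offer"
    winning_copy: str
    losing_copy: str
    relative_uplift: float  # winner vs loser, e.g. 0.12 for +12%
    significant: bool       # did the result clear your significance bar?
    audience: str
    channel: str
    notes: str = ""


def proven_variants(library: list[ExperimentRecord], element: str,
                    channel: Optional[str] = None,
                    audience: Optional[str] = None) -> list[ExperimentRecord]:
    """Significant winners for one element, best lift first, optionally filtered."""
    matches = [
        r for r in library
        if r.element == element
        and r.significant
        and (channel is None or r.channel == channel)
        and (audience is None or r.audience == audience)
    ]
    return sorted(matches, key=lambda r: r.relative_uplift, reverse=True)


# Hypothetical entries; note the same headlines won in opposite directions
# for different audiences and channels.
library = [
    ExperimentRecord("headline", "Boost your conversion rate in 7 days",
                     "Why most campaigns fail to convert", 0.12, True, "new visitors", "email"),
    ExperimentRecord("cta", "Start your free trial", "Get instant access now",
                     0.05, False, "new visitors", "landing page"),
    ExperimentRecord("headline", "Why most campaigns fail to convert",
                     "Boost your conversion rate in 7 days", 0.08, True,
                     "returning visitors", "landing page"),
]

for r in proven_variants(library, "headline"):
    print(f"{r.winning_copy!r} (+{r.relative_uplift:.0%}, {r.channel}, {r.audience})")
```

Keeping inconclusive tests in the library, flagged as not significant, also stops teams from rerunning them unknowingly.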

Set a Cadence for Continuous Testing

Effective split-test copywriting isn’t a one-time effort—it’s continuous. Set up a cadence (e.g., bi-weekly or monthly) where you identify one or two new copy tests to run.

Prioritise based on impact (e.g., high-traffic pages, key conversion steps). Use your framework: hypothesis > variants > test > learn > apply.

As you iterate, your baseline improves, and you can focus on smaller incremental gains.

Expand Tests to New Variants and Channels

Once you’ve nailed major elements (headline, offer, CTA), you can test smaller but meaningful changes (tone, structure, segmentation).

Also expand into new channels and contexts using your variant library. Your split-test copywriting output grows, and you maintain momentum.

Monitor Long-Term Impact and Quality

Don’t just look at immediate conversion uplift. Monitor downstream metrics: retention, customer value, brand sentiment, complaint rates.

A variant that drives a high initial conversion but low retention may not be a true win. Document long-term results, and refine your variant library accordingly.

Build Cross-Functional Alignment

Ensure marketing, analytics, design and copywriting teams are aligned.

Share results from your split-test copywriting process, insights learned, variant library, and schedule of upcoming tests.

This builds institutional memory and prevents redundant testing or reinventing the wheel.

Common Pitfalls and How to Avoid Them in Split-Test Copywriting

Even with the best intentions, many split-test copywriting efforts fail to generate meaningful insights.

Being aware of common pitfalls helps you avoid them and ensures your tests produce actionable results.

Insufficient Traffic or Sample Size

One of the most frequent issues is running tests with too few visitors, resulting in inconclusive data.

Without statistically reliable results, you risk adopting a variant that appears to win by chance.

Conversion-rate optimisation experts emphasise the need for sufficient traffic and a properly computed sample size.

Make sure you plan for an adequate duration and volume before declaring a winner.
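Turning a required sample size into a calendar duration is simple arithmetic. The sketch assumes steady daily traffic and rounds up to whole weeks so that each day of the week is represented equally, a common convention rather than a rule; the function name and figures are hypothetical.

```python
from math import ceil


def estimate_duration_days(n_per_variant: int, n_variants: int, daily_visitors: int,
                           share_in_test: float = 1.0, min_days: int = 7) -> int:
    """Days needed to collect the planned sample, rounded up to whole weeks."""
    needed = n_per_variant * n_variants
    per_day = daily_visitors * share_in_test  # visitors actually entering the test
    days = ceil(needed / per_day)
    return max(min_days, ceil(days / 7) * 7)


# Hypothetical: 18,000 visitors per variant, two variants, 1,500 visitors a day.
print(estimate_duration_days(18_000, 2, 1_500))  # 28
```

If only half the traffic enters the test (`share_in_test=0.5`), the same plan needs 48 days, which rounds up to 49.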

Testing Too Many Variables at Once

If you change multiple elements (headline, offer, CTA, image, layout) in one variant you won’t know which change drove the result.

That makes your split-test copywriting less useful for learning. Instead, keep changes discrete, or if you test multiple changes, accept that the winner is a bundle and further tests may be needed to isolate each effect.
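The cost of bundling becomes clear when you count combinations. In a full-factorial multivariate test, every combination of element versions is its own cell, and each cell needs enough traffic on its own. A small sketch with hypothetical element counts and a hypothetical per-cell requirement:

```python
from math import prod


def factorial_cells(levels_per_element: dict[str, int]) -> int:
    """Number of distinct page versions in a full-factorial test."""
    return prod(levels_per_element.values())


# Hypothetical: 3 headlines, 2 offers, 2 CTAs, 2 images.
elements = {"headline": 3, "offer": 2, "cta": 2, "image": 2}
cells = factorial_cells(elements)
visitors_per_cell = 18_000  # hypothetical requirement from a sample-size calculation
print(cells, "cells,", cells * visitors_per_cell, "visitors in total")
```

Here that is 24 cells and 432,000 visitors, against 36,000 for a single two-variant test at the same per-cell requirement.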

Neglecting External Factors

Audience source, time of day, device type and seasonal effects can all influence results. If your test traffic is not evenly distributed or biased by context, your data may be skewed.

One article cautions against assuming that the copy change is the sole factor when many outside influences exist.

Always randomise traffic and keep other variables constant where possible.

Focusing Only on Superficial Changes

Changing the button colour is easy to test, but it often delivers negligible gains compared with copy changes that address value, pain points or segmentation.

Some UX research warns against trivial tests that don’t move the core needle. Choose tests that align with your business goal and can deliver meaningful impact.

Forgetting to Apply Learnings

Running a test is only half the job. If you don’t document results, adopt the winning variant and apply insights to future campaigns, you lose the long-term benefit.

Good split-test copywriting means you build cumulative learning, not just one-off wins.

By being mindful of these pitfalls, you ensure your split-test copywriting efforts are efficient, insightful and scalable, rather than ad hoc or noisy.

When to Stop Testing and Adopt a Winner

One question often arises: when do you conclude a test and move the winning variant into full deployment?

While there’s no one-size-fits-all answer, a few principles help guide you in your split-test copywriting process.

Achieve Statistical Significance

Before adopting a variant, ensure the result is statistically significant. If you have low traffic or very small differences, you risk false positives.

Many A/B testing platforms include built-in confidence metrics to help determine when you’ve run enough.
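A p-value alone doesn't say how large the lift plausibly is; a confidence interval for the difference in rates does. If the whole interval sits above zero, and above the smallest lift worth acting on, adoption is easier to justify. This sketch uses the simple Wald interval and invented counts, and assumes the sample size was fixed in advance: checking repeatedly and stopping at the first significant reading inflates the false-positive rate. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald interval for the absolute difference in rates (B - A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)  # unpooled standard error
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def ready_to_adopt(low: float, min_worthwhile_lift: float = 0.0) -> bool:
    """Adopt only if even the pessimistic end of the interval clears the bar."""
    return low > min_worthwhile_lift


# Hypothetical results: control 400/10,000 vs challenger 470/10,000.
low, high = diff_confidence_interval(400, 10_000, 470, 10_000)
print(f"95% CI for the difference: {low * 100:+.2f} to {high * 100:+.2f} percentage points")
print("Adopt challenger:", ready_to_adopt(low))
```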

Monitor for Consistency Over Time

A variant might perform well initially and then trend back down, for example once its novelty wears off.

You should monitor performance over a reasonable time period (e.g., one full business cycle or relevant funnel window) to ensure the lift holds.

With split-test copywriting, consistency matters as much as uplift.
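One simple way to check that a lift holds is to compute it week by week. A minimal sketch, assuming you can export weekly counts per variant; the function names, the 5% threshold and the numbers are hypothetical. Individual weeks have small samples, so treat this as a sanity check for fading effects rather than a separate significance test.

```python
def weekly_uplifts(weeks: list[tuple[int, int, int, int]]) -> list[float]:
    """Relative uplift per week from (control_conv, control_n, variant_conv, variant_n)."""
    uplifts = []
    for control_conv, control_n, variant_conv, variant_n in weeks:
        control_rate = control_conv / control_n
        variant_rate = variant_conv / variant_n
        uplifts.append((variant_rate - control_rate) / control_rate)
    return uplifts


def lift_holds(uplifts: list[float], min_lift: float = 0.05) -> bool:
    """True only if every week clears the minimum worthwhile lift."""
    return all(u >= min_lift for u in uplifts)


# Hypothetical: a strong first week that fades.
weeks = [(200, 5_000, 260, 5_000), (200, 5_000, 230, 5_000), (200, 5_000, 205, 5_000)]
ups = weekly_uplifts(weeks)
print([f"{u:+.1%}" for u in ups])
print("Lift holds:", lift_holds(ups))
```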

Evaluate Downstream Impact

Before fully committing, consider downstream metrics like customer satisfaction, retention and lifetime value.

A variant that maximises conversions but reduces quality of leads may be a false win. A fuller view makes your split-test copywriting more strategic.

Document and Roll-Out

Once you’ve validated a variant, document the copy variant, key findings, traffic source, and results.

Then roll out the variant broadly, apply insights to related pages or channels, and plan your next test. This completes the cycle of your split-test copywriting process.

Adopting this disciplined approach ensures that your split-test copywriting evolves into a mature function within your marketing rather than sporadic experimentation.

Conclusion

When you integrate split-test copywriting into your marketing workflow, you’re elevating your copy from art to science.

You’re creating messages that are proven, not just presumed. You’re building a library of high-performing variants tailored to your audience, channel and context.

Across each variant—whether headline, offer framing, CTA, tone, media, segmentation or channel—you’re iterating toward stronger performance.

Over time, your baseline improves, your conversion rates climb, and your risk decreases.

But the key to success is consistency. Set up a repeatable framework, plan your tests, monitor the metrics, document your findings and apply what you learn.

Also avoid common mistakes: insufficient sample size, too many variables, neglecting context or downstream metrics.

If you follow this process, your split-test copywriting becomes not just an occasional tactic, but a disciplined engine of growth.

The messages you craft will not just feel right—they’ll perform, measured by the metrics that matter.